Google I/O 2025 focused less on ideas for tomorrow and more on tools you can actually use today. The spotlight was on practical AI-powered updates, especially Google's Gemini models, which are becoming part of nearly every Google product. Whether you rely on Gmail, Search, or Android, many of these updates are already rolling out, and some are live as you read this. The shift is not just technical; it is designed to make everyday tasks quicker and less tedious. From smarter search results to writing assistance, here is what's new and available to try right now.

All the New Google I/O Features You Can Try Right Now

Gemini Comes to More Apps—and It's Not Just Chat

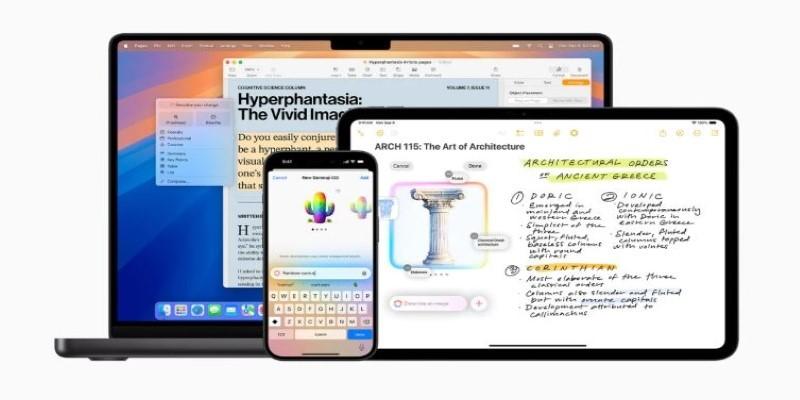

Gemini is now built more deeply into Gmail, Docs, Sheets, and Slides. You can type a few words in Gmail and get a full draft, or have Gemini rewrite a paragraph in Docs to match your preferred tone. In Sheets, it helps make sense of raw numbers by suggesting formulas or generating summaries. In Slides, it can suggest layouts or rewrite your speaker notes.

What makes this helpful is the way it links context between apps. For instance, a budget discussed in Docs can be used in a financial summary in Sheets. This integration is now available for both Workspace and personal Google accounts.

Search Gets AI Overviews—Without the Wait

Google Search now features AI Overviews, which go beyond simple answer boxes by providing fuller explanations at the top of the results page. Ask something layered, such as comparing energy sources or planning a trip with multiple stops, and the response comes back more structured. It draws on several sources to give a fuller, clearer answer.

What's useful is that it supports follow-up questions. You can ask a general question, then go deeper without repeating the topic. This makes Search feel more conversational and responsive. AI Overviews are already available in the U.S., with more countries to follow.

Circle to Search Now Supports Homework Help

Circle to Search is now smarter and expanding to more Android devices. Originally designed for quick web lookups, it now supports academic help. You can circle a math problem or a paragraph, and get a step-by-step breakdown or summary.

This makes it ideal for students or parents helping with assignments. Rather than giving just an answer, it explains the reasoning. The feature is available on newer Pixel and Samsung phones and will roll out to others soon through software updates.

Android Gets Smarter With Context-Aware Suggestions

Android 15 is focused on making your phone more responsive to what you're doing. If you’re talking about dinner plans in a chat, your phone might suggest calendar slots, directions, or nearby restaurants automatically. You won’t need to switch between apps or search manually.

Gemini Nano, a smaller Gemini model built to run on the device itself, powers much of this. Because processing happens locally rather than in the cloud, that means fewer privacy concerns and faster responses. Android's AICore system service also lets developers bring this kind of on-device AI into their own apps. It is already active on the latest Pixel devices.

New NotebookLM Brings Better Research Tools

NotebookLM is now a public tool for anyone who needs help organizing and reviewing information. You can upload different types of documents, and Gemini will summarize them, pull out quotes, or create timelines.

Say you're writing a report that draws on multiple sources: NotebookLM can answer questions based on the files you've uploaded. It is particularly helpful for research, whether you're a student or a working professional. The tool works with Google Docs, other Drive files, and additional text formats, and it is available now.

Project Astra: AI With Eyes and Ears

One of the most intriguing updates was Project Astra, a system designed to understand the world visually and audibly. In a live demo, it identified items in a room and remembered where they were placed. Ask it where your keys are, and it recalls where it last saw them.

Though still in development, some of Astra’s early features are becoming available in the Gemini app and through Google Lens. The idea is to bring visual context into how you use AI—answering not just questions you type, but ones based on what you see. Wider access is expected later this year.

Chrome Gets Writing Help and Web Summaries

Chrome now includes new tools to help with reading and writing online. When you fill out forms or write comments, Gemini can help draft the text. This is useful for things like customer service messages or professional bios.

For harder-to-read content, there's a new "Help me understand" feature that simplifies complex web pages. It breaks them down into main points, making research easier and quicker. This is already live in Chrome for desktop, with mobile support on the way.

Google Photos’ Magic Editor Now Widely Available

Magic Editor is now part of the Photos app for more users. It allows you to move objects, adjust lighting, or even fill in parts of an image with AI-generated content. You can remove unwanted items from a shot and make it look natural without using third-party software.

This editing tool used to be exclusive to the Pixel 8, but it's now rolling out to other phones. Some features remain limited to users with a Google One subscription, but basic editing options are more widely available.

Conclusion

The biggest takeaway from Google I/O this year is how quickly these new AI features are becoming part of everyday tools. They aren't buried in experimental apps or future releases; they're built into the services you already use. Whether it's writing help in Gmail, smarter responses in Search, or homework support through Circle to Search, these upgrades are here now. Gemini sits at the center of these changes, and its integration across Google's ecosystem is only growing. Instead of adding complexity, it aims to simplify daily tasks. If you use Android, Chrome, or Google Workspace, this shift is already affecting how you work and communicate. There's more coming, but plenty is ready today.