When it comes to AI-generated images, there’s always a bit of a tug-of-war between quality and speed. Some models are fast but blurry; others take forever but produce sharp results. Then came VQ-Diffusion, a method that applies diffusion to discrete tokens rather than raw pixels, and that works for any data that can be turned into tokens: images, audio, and even text. So, what exactly is it? And why does it matter? Let’s break it down in plain words.

Think of it this way: older diffusion models work like slow sculptors. They start with a blob of noise and chisel away, step by step, until something meaningful emerges. This is great for continuous data, but not so much when you're dealing with categories, tokens, or anything that isn’t just raw pixel values. That’s where VQ-Diffusion flips the script.

A Quick Look at the Core Idea

Instead of working directly with pixels or waveforms, VQ-Diffusion works with tokens: small, discrete building blocks. It uses a technique called vector quantization (VQ), where complex data is compressed into a sequence of tokens drawn from a fixed codebook. Once the image (or sound or sentence) is broken into these tokens, the model learns which token belongs at each position.

Why does this matter? Because modeling tokens instead of raw data allows VQ-Diffusion to handle structure much better. It’s like working with Lego blocks instead of clay — easier to organize, less messy, and a lot faster once you get the hang of it.

How VQ-Diffusion Actually Works

There are two main pieces at play here: vector quantization and diffusion. Let’s tackle each.

1. Vector Quantization (VQ)

This is where the input — an image, for example — gets turned into a grid of tokens. Each token represents a small chunk of the image and is selected from a learned codebook. The codebook has a fixed number of options, which means the model doesn’t need to guess from an infinite range — just pick the best fit from a pre-set list.

This step reduces the complexity. Instead of predicting a continuous color value for every pixel, the model only needs to pick from a fixed set of symbols, one for each chunk of the image.
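To make the codebook lookup concrete, here is a minimal NumPy sketch. It assumes the encoder has already turned each chunk into a small feature vector; the codebook values and the names `quantize` and `dequantize` are made up for illustration, and a real VQ-VAE would learn its codebook during training.

```python
import numpy as np

def quantize(vectors, codebook):
    """Return, for each input vector, the index of its nearest codebook entry."""
    # vectors: (N, D), codebook: (K, D) -> squared distances: (N, K)
    diffs = vectors[:, None, :] - codebook[None, :, :]
    distances = (diffs ** 2).sum(axis=-1)
    return distances.argmin(axis=1)

def dequantize(token_ids, codebook):
    """Look the tokens back up in the codebook to get approximate vectors."""
    return codebook[token_ids]

# Toy codebook with K=4 entries of dimension D=2 (hypothetical values).
codebook = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])

# Three feature vectors, standing in for encoder outputs for three chunks.
vectors = np.array([[0.1, -0.2], [0.9, 0.8], [0.2, 0.7]])

token_ids = quantize(vectors, codebook)
reconstructed = dequantize(token_ids, codebook)
print(token_ids)  # [0 3 2]
```

Each vector collapses to a single integer, and that integer is all the diffusion model ever sees.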

2. Diffusion on Tokens

Here’s where it gets more interesting. Traditional diffusion models work by gradually adding noise to the input and then learning how to reverse the process. In VQ-Diffusion, the same idea is applied, but at the token level. The model adds “token noise”: think of it as swapping some Lego blocks for random ones and pulling others out entirely, leaving a blank (a mask token) in their place. It then learns to put the original blocks back.

This process doesn’t need to be super slow. The token grid is much smaller than the pixel grid (a 256×256 image might become a 32×32 grid of tokens, for instance), so each step has far fewer values to predict while the overall structure stays intact. That’s part of the appeal.

What Makes VQ-Diffusion Stand Out

There are already a lot of diffusion models out there. So what makes this one worth your attention? Let’s look at some of the key reasons why researchers and developers are taking notice.

Better Structure Handling

One of the big problems with pixel-level diffusion is that it doesn’t always capture structure. You might get an image that looks fine at first glance but has strange distortions when you zoom in, like an extra finger or a warped background. VQ-Diffusion tries to reduce this by modeling structure at a higher level. Because each token stands for a whole patch rather than a single pixel, the model can focus on edges, shapes, and layouts instead of pixel-level detail.

Faster Training and Sampling

Working with a limited set of tokens can also make training more efficient. The model chooses among a finite set of options at each position, which tends to help it converge more quickly. And when it comes to sampling (generating new data), VQ-Diffusion can often produce something decent in fewer steps. That’s a win for developers who want both speed and quality.

Supports More Than Just Images

While a lot of attention has been on image generation, the idea behind VQ-Diffusion isn’t tied to any one medium. Audio, video, text — anything that can be tokenized can benefit from this approach. This opens up possibilities for more structured music synthesis, coherent story generation, and even hybrid formats like video with synchronized sound.

How to Build With VQ-Diffusion: A Simplified Step-by-Step Guide

If you’re the type who likes to get your hands dirty, here’s a high-level view of how you’d go about using VQ-Diffusion in a real project.

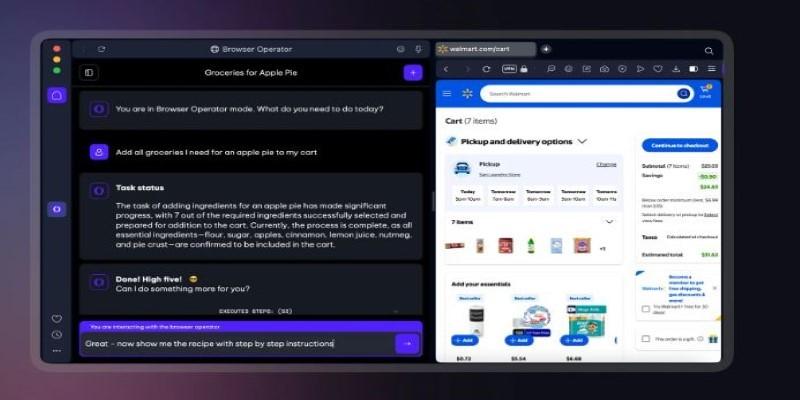

Step 1: Choose Your Tokenizer

Before you can run diffusion on tokens, you need to define them. That means training a VQ-VAE or similar model (or using a pre-trained one) to compress your data into discrete codes. These codes are the raw material that the diffusion model will work with.

Step 2: Set Up the Diffusion Process

Once you have the token sequences, you set up a forward process that gradually corrupts them, replacing some tokens with random ones and, in VQ-Diffusion’s mask-and-replace scheme, swapping others for a special mask token. This is your noise process.

Then comes the reverse process, which the model learns. Here, the job is to predict the original tokens step by step, starting from the corrupted version. You can think of it like solving a scrambled puzzle, fixing a few more pieces on each pass.
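As a rough sketch of the forward (noise) process, the function below jumps straight from clean tokens to their corrupted version at step `t`. The linear schedule, the `final_mask` and `final_replace` values, and the use of one extra id as a mask token are assumptions chosen for illustration, not the settings of any particular implementation.

```python
import numpy as np

def corrupt_to_step(clean_tokens, t, num_steps, num_codes, rng,
                    final_mask=0.9, final_replace=0.1):
    """Corrupt clean token ids to noise level t (0 = clean, num_steps = fully noisy)."""
    mask_id = num_codes              # assumption: one extra id reserved for the mask token
    frac = t / num_steps             # simple linear schedule (illustrative)
    replace_prob = final_replace * frac
    mask_prob = final_mask * frac

    # One uniform draw per position decides: replace, mask, or keep.
    u = rng.random(clean_tokens.shape)
    replaced = u < replace_prob
    masked = (u >= replace_prob) & (u < replace_prob + mask_prob)

    noisy = clean_tokens.copy()
    noisy[replaced] = rng.integers(0, num_codes, size=int(replaced.sum()))
    noisy[masked] = mask_id
    return noisy

rng = np.random.default_rng(0)
clean = rng.integers(0, 16, size=1000)   # pretend these came from a tokenizer

untouched = corrupt_to_step(clean, t=0, num_steps=100, num_codes=16, rng=rng)
halfway = corrupt_to_step(clean, t=50, num_steps=100, num_codes=16, rng=rng)
final = corrupt_to_step(clean, t=100, num_steps=100, num_codes=16, rng=rng)

print((halfway == 16).mean())   # share of masked positions halfway through
print((final == 16).mean())     # share of masked positions at the last step
```

With these settings roughly 45% of positions are masked at the halfway point and roughly 90% at the end. The exact numbers vary with the random seed.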

Step 3: Train the Model

Now it’s training time. Feed the model a bunch of corrupted token sequences and ask it to predict what the clean version should be. Over time, it gets better at spotting patterns and guessing the right tokens.
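One simple way to score those predictions is cross-entropy between the model’s output and the clean tokens. The sketch below shows only that scoring step, with hand-made logits standing in for a real network. Published training objectives for discrete diffusion are more involved, so treat this as a simplified illustration; `token_cross_entropy` is a hypothetical helper name.

```python
import numpy as np

def token_cross_entropy(logits, clean_tokens):
    """Mean cross-entropy between predicted logits (L, K) and clean token ids (L,)."""
    # Numerically stable log-softmax over the K codebook entries.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # Pick out the log-probability assigned to the correct token at each position.
    picked = log_probs[np.arange(len(clean_tokens)), clean_tokens]
    return -picked.mean()

clean = np.array([2, 0, 1])               # the tokens the model should recover

# A model with no idea: equal scores for all 4 codebook entries.
uniform_logits = np.zeros((3, 4))

# A model that is confidently right at every position.
confident_logits = np.full((3, 4), -20.0)
confident_logits[np.arange(3), clean] = 20.0

loss_uniform = token_cross_entropy(uniform_logits, clean)
loss_confident = token_cross_entropy(confident_logits, clean)
print(loss_uniform, loss_confident)
```

The clueless model scores log(4), the loss of a uniform guess over four options, while the confident model scores close to zero. Training pushes the model from the first case toward the second.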

Training can take a while, but it’s typically faster than pixel-level diffusion because the token space is smaller and more structured.

Step 4: Generate New Outputs

After training, you can start sampling. Begin with a fully corrupted sequence (random tokens, or all mask tokens if your noise process uses masking), and let the model reverse the diffusion steps until you get a coherent result. Then, decode the tokens back to the original format using your VQ tokenizer’s decoder.
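The sampling loop can be sketched as follows. This version assumes a mask-based noise process, so generation starts from an all-mask sequence. `denoiser` is a hypothetical stand-in for your trained model, and the rule of revealing a few positions per step is a simplification for readability rather than the exact procedure used by VQ-Diffusion.

```python
import numpy as np

def sample_tokens(denoiser, length, num_codes, num_steps, rng):
    """Start from all-mask tokens and fill them in over num_steps reverse steps."""
    mask_id = num_codes                        # assumption: extra id used as the mask token
    tokens = np.full(length, mask_id)

    for step in range(num_steps, 0, -1):
        masked = np.flatnonzero(tokens == mask_id)
        if masked.size == 0:
            break

        # Ask the model for a distribution over codebook entries at every position.
        logits = denoiser(tokens, step)        # expected shape: (length, num_codes)
        probs = np.exp(logits - logits.max(axis=-1, keepdims=True))
        probs /= probs.sum(axis=-1, keepdims=True)
        proposal = np.array([rng.choice(num_codes, p=row) for row in probs])

        # Reveal enough masked positions that none are left after the final step.
        num_reveal = int(np.ceil(masked.size / step))
        chosen = rng.choice(masked, size=num_reveal, replace=False)
        tokens[chosen] = proposal[chosen]

    return tokens

# Stand-in "model" that always strongly prefers one fixed target sequence.
target = np.array([3, 1, 4, 1, 5, 2])

def oracle_denoiser(tokens, step):
    logits = np.zeros((len(target), 8))
    logits[np.arange(len(target)), target] = 50.0
    return logits

rng = np.random.default_rng(0)
generated = sample_tokens(oracle_denoiser, length=6, num_codes=8, num_steps=3, rng=rng)
print(generated)
```

In a real pipeline you would pass the resulting token ids to the tokenizer’s decoder to get an image, audio clip, or sentence back.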

And that’s it — you’ve just built a VQ-Diffusion pipeline.

Before We Wrap This Up

VQ-Diffusion doesn’t promise magic, but it does offer a smarter way to work with structured data. By shifting the focus from raw pixels to meaningful tokens, it aims to narrow the gap between quality and speed in generative models. The potential payoff? Sharper images, clearer audio, and more coherent text, with fewer steps and better structure.

It’s not the only model doing this kind of work, but its balance of efficiency and accuracy makes it one of the more exciting approaches out there. If you’re working in any field where structure matters — whether it's design, audio synthesis, or story generation — it might be worth taking a closer look.